Difference between revisions of "Research"

From ACES

| (6 intermediate revisions by 2 users not shown) | |||

| Line 11: | Line 11: | ||

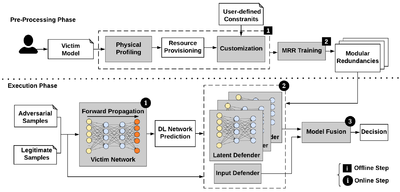

<div>[[File:Adversarial Deepfakes.png|400x400px]]</div> | <div>[[File:Adversarial Deepfakes.png|400x400px]]</div> | ||

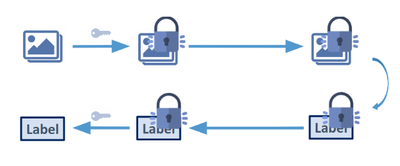

<div>Adversarial Deepfakes: Recent advances in video manipulation techniques have made the generation of fake videos more accessible than ever before. Manipulated videos can fuel disinformation and reduce trust in the media. Therefore, detection of fake videos has garnered immense interest in academia and industry. Recently developed Deepfake detection methods rely on deep neural networks (DNNs) to distinguish AI-generated fake videos from real videos. In this work, we demonstrate that it is possible to bypass such detectors by adversarially modifying fake videos synthesized using existing Deepfake generation methods. We further demonstrate that our adversarial perturbations are robust to image and video compression codecs, making them a real-world threat. We present pipelines in both white-box and black-box attack scenarios that can fool DNN-based Deepfake detectors into classifying fake videos as real. | <div>Adversarial Deepfakes: Recent advances in video manipulation techniques have made the generation of fake videos more accessible than ever before. Manipulated videos can fuel disinformation and reduce trust in the media. Therefore, detection of fake videos has garnered immense interest in academia and industry. Recently developed Deepfake detection methods rely on deep neural networks (DNNs) to distinguish AI-generated fake videos from real videos. In this work, we demonstrate that it is possible to bypass such detectors by adversarially modifying fake videos synthesized using existing Deepfake generation methods. We further demonstrate that our adversarial perturbations are robust to image and video compression codecs, making them a real-world threat. We present pipelines in both white-box and black-box attack scenarios that can fool DNN-based Deepfake detectors into classifying fake videos as real. | ||

</div> | |||

<div></div> | <div></div> | ||

<div> | <div></div> | ||

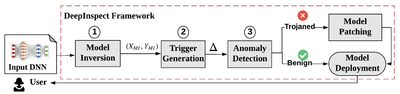

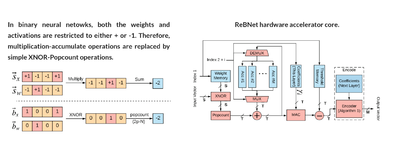

<div>[[File:Universal Adversarial Perturbations for Speech Recognition Systems.png|400x400px]]</div> | <div>[[File:Universal Adversarial Perturbations for Speech Recognition Systems.png|400x400px]]</div> | ||

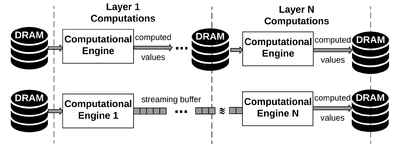

<div>Universal Adversarial Perturbations for Speech Recognition Systems | <div>We also explore security threats and defense frameworks for machine learning models employed in audio/speech processing domains. In our work, Universal Adversarial Perturbations for Speech Recognition Systems, we demonstrate the existence of universal adversarial audio perturbations that cause mis-transcription of audio signals by automatic speech recognition (ASR) systems. We propose an algorithm to find a single quasi-imperceptible perturbation which, when added to any arbitrary speech signal, will most likely fool the victim speech recognition model. Our experiments demonstrate the application of our proposed technique by crafting audio-agnostic universal perturbations for the state-of-the-art ASR system – Mozilla DeepSpeech. Additionally, we show that such perturbations generalize to a significant extent across models that are not available during training, by performing a transferability test on a WaveNet-based ASR system.</div> | ||

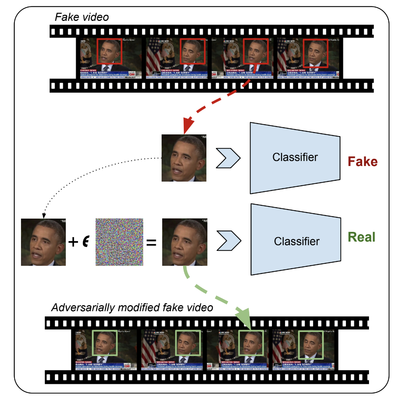

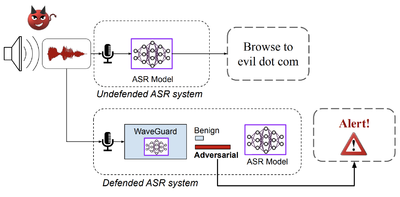

<div>[[File:Waveguard Defense Framework.png|400x400px]]</div> | <div>[[File:Waveguard Defense Framework.png|400x400px]]</div> | ||

<div> | <div>[https://www.usenix.org/conference/usenixsecurity21/presentation/hussain WaveGuard] Defense Framework for Speech Recognition Systems: There has been a recent surge in adversarial attacks on deep-learning-based automatic speech recognition (ASR) systems. These attacks pose new challenges to deep learning security and have raised significant concerns about deploying ASR systems in safety-critical applications. In this work, we introduce WaveGuard: a framework for detecting adversarial inputs that are crafted to attack ASR systems. Our framework incorporates audio transformation functions and analyzes the ASR transcriptions of the original and transformed audio to detect adversarial inputs. We demonstrate that our defense framework is able to reliably detect adversarial examples constructed by four recent audio adversarial attacks, with a variety of audio transformation functions. With careful regard for best practices in defense evaluations, we analyze our proposed defense and its ability to withstand adaptive and robust attacks in the audio domain. We empirically demonstrate that audio transformations that recover audio from perceptually informed representations can lead to a strong defense that is robust against an adaptive adversary even in a complete white-box setting. Furthermore, WaveGuard can be used out of the box and integrated directly with any ASR model to efficiently detect audio adversarial examples, without the need for model retraining.</div> | ||

</div> | </div> | ||
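
The detection idea described for WaveGuard above (transcribe the original audio, transcribe a transformed copy, and compare the two transcriptions) can be sketched roughly as follows. This is a minimal illustration, not the published implementation: the function names, the use of character error rate as the comparison metric, and the `threshold` value are all assumptions made for the example, and `transcribe` and `transform` stand in for whatever ASR model and audio transformation are actually used.

```python
from typing import Any, Callable


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance normalized by the reference length."""
    if not reference:
        return 0.0 if not hypothesis else 1.0
    return edit_distance(reference, hypothesis) / len(reference)


def looks_adversarial(audio: Any,
                      transcribe: Callable[[Any], str],
                      transform: Callable[[Any], Any],
                      threshold: float = 0.5) -> bool:
    """Flag the input if its transcription changes too much after the transform.

    `transcribe`, `transform`, and `threshold` are hypothetical placeholders
    supplied by the caller; nothing here is tied to a specific ASR system.
    """
    original_text = transcribe(audio)
    transformed_text = transcribe(transform(audio))
    return character_error_rate(original_text, transformed_text) > threshold
```

Because the ASR model is only called through `transcribe`, a check of this shape needs no access to model internals and no retraining, which matches the out-of-the-box property described above.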

| Line 28: | Line 27: | ||

As data-mining algorithms are being incorporated into today’s technology, concerns about data privacy are rising. The ACES Lab has been contributing to the field of privacy-preserving computing for more than a decade and remains one of the pioneers in the area. Several signature research domains of our group are listed below.

<div class="research-grid"> | <div class="research-grid"> | ||

<div></div> | <div><!-- You can add a image here --></div> | ||

<div>Our group is dedicated to executing Secure Function Evaluation (SFE) protocols within practical time limits. Along with algorithmic optimizations, we are working on accelerating the underlying computation by designing application-specific accelerators. [https://github.com/ACESLabUCSD/FASE FASE] [FCCM'19], currently the fastest accelerator for Yao's Garbled Circuit (GC) protocol, was designed by the ACES Lab.
It outperformed previous work by at least 110 times in throughput per core. Our group also developed [https://dl.acm.org/doi/10.1145/3195970.3196074 MAXelerator] [DAC'18], an FPGA accelerator for GC customized for matrix multiplication. It is 4 times faster than FASE for this particular operation. MAXelerator is one example of co-optimizing the algorithm and the underlying hardware platform. Our current projects include acceleration of Oblivious Transfer (OT) and Homomorphic Encryption (HE). Moreover, as part of our current efforts to develop practical privacy-preserving Federated Learning (FL), we are evaluating the existing hardware cryptographic primitives on Intel processors and devising new hardware-based primitives that complement the available resources. Our group plans to design efficient systems through the co-optimization of the FL algorithms, defense mechanisms, cryptographic primitives, and hardware primitives. <ref>Siam: please add tinygarble and add a picture for all of the papers mentioned in this paragraph.</ref></div>
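
For readers unfamiliar with the Garbled Circuit protocol mentioned above, the following toy sketch garbles and evaluates a single AND gate in the classic textbook style. It is only an illustration under stated assumptions: it does not reflect how FASE or MAXelerator work, it omits standard optimizations and the oblivious transfer step, and the function names, the SHA-256 based pad, and the all-zero validity tag are choices made for this example rather than anything taken from the papers.

```python
import hashlib
import random
import secrets

LABEL_BYTES = 16
TAG = bytes(LABEL_BYTES)  # all-zero tag that marks a correctly decrypted row


def _pad(key_a: bytes, key_b: bytes) -> bytes:
    """Derive a 32-byte one-time pad from the two input wire labels."""
    return hashlib.sha256(key_a + key_b).digest()


def _xor(x: bytes, y: bytes) -> bytes:
    return bytes(p ^ q for p, q in zip(x, y))


def garble_and_gate():
    """Garbler side: pick random labels and build the encrypted truth table."""
    # labels[wire][bit] is the random label standing for `bit` on `wire`.
    labels = {
        wire: (secrets.token_bytes(LABEL_BYTES), secrets.token_bytes(LABEL_BYTES))
        for wire in ("a", "b", "out")
    }
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            out_label = labels["out"][bit_a & bit_b]
            row = _xor(_pad(labels["a"][bit_a], labels["b"][bit_b]), out_label + TAG)
            table.append(row)
    # Shuffle so that the row position reveals nothing about the input bits.
    random.SystemRandom().shuffle(table)
    return labels, table


def evaluate(table, label_a: bytes, label_b: bytes) -> bytes:
    """Evaluator side: holds one label per input wire and learns one output label."""
    pad = _pad(label_a, label_b)
    for row in table:
        candidate = _xor(pad, row)
        if candidate[LABEL_BYTES:] == TAG:  # only the matching row ends in the tag
            return candidate[:LABEL_BYTES]
    raise ValueError("no row decrypted with the given labels")
```

The evaluator only ever sees opaque labels, so it learns the output label for `a AND b` without learning the input bits themselves. Every gate costs several hash evaluations in this scheme, which is the kind of repetitive computation that hardware accelerators for GC target.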

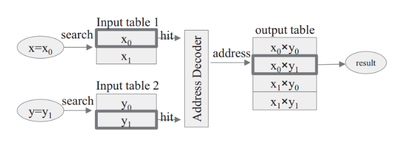

<div>[[File:pp-inference.png|400x400px]]</div> | <div>[[File:pp-inference.png|400x400px]]</div> | ||

<div>Advancements in deep neural networks have fueled machine learning as a service, where clients receive an inference service from a cloud server. Many applications such as medical diagnosis and financial data analysis require the client’s data to remain private. The objective of privacy-preserving inference is to use secure computation protocols to perform inference on the client’s data, without revealing the data to the server. Towards this goal, the ACES lab has been conducting exemplary research at the intersection of DNN inference and secure computation.
We have developed several projects including [https://dl.acm.org/doi/abs/10.1145/3195970.3196023 DeepSecure], [https://dl.acm.org/doi/pdf/10.1145/3196494.3196522 Chameleon], [https://www.usenix.org/conference/usenixsecurity19/presentation/riazi XONN], [https://openaccess.thecvf.com/content/CVPR2021W/BiVision/papers/Samragh_On_the_Application_of_Binary_Neural_Networks_in_Oblivious_Inference_CVPRW_2021_paper.pdf SlimBin], [https://dl.acm.org/doi/10.1145/3460120.3484797 COINN], and several ongoing projects. The core idea of our work is to co-optimize machine learning algorithms with secure execution protocols to achieve a better tradeoff between accuracy and runtime. Using this co-optimization approach, our customized DNN inference solutions have achieved significant runtime improvements over contemporary work.</div>

</div> | </div> | ||

| Line 51: | Line 50: | ||

<div></div> | <div></div> | ||

<div | <div></div> | ||

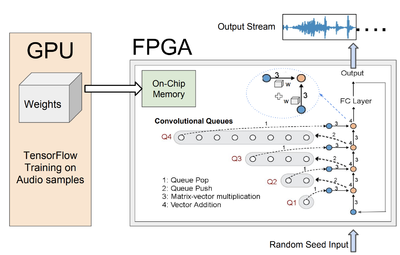

<div>[[File:WaveNet.png|400x400px]]</div> | <div>[[File:WaveNet.png|400x400px]]</div> | ||

Latest revision as of 14:00, 12 April 2022

The ACES lab is conducting interdisciplinary research in robust ML, privacy-preserving technologies, automation and hardware, IP protection, and emerging technologies.

Robust ML

Machine Learning (ML) models are often trained to satisfy a certain measure of performance such as classification accuracy, object detection accuracy, etc. In safety-sensitive tasks, a reliable ML model should satisfy reliability and robustness tests in addition to accuracy. Our group has made several key contributions to the development of robustness tests and safeguarding methodologies to enhance the reliability of ML systems. Some example projects are listed below:

Privacy-preserving technologies

As data-mining algorithms are being incorporated into today’s technology, concerns about data privacy are rising. The ACES Lab has been contributing to the field of privacy-preserving computing for more than a decade and remains one of the pioneers in the area. Several signature research domains of our group are listed below.

Automation and Hardware

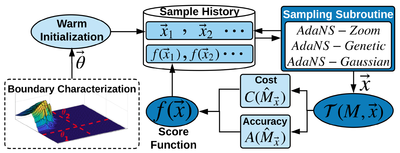

Many data-mining algorithms and machine learning techniques were originally developed to run on high-end GPU clusters that have massive computing capacity and power consumption. Many real-world applications, however, require these algorithms to run on embedded devices where the computing capacity and power budget are limited. The ACES group has developed several innovations in the areas of DNN compression/customization, hardware design for ML, and design automation for efficient machine learning. Several example projects are listed below:

IP Protection

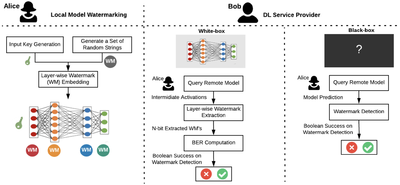

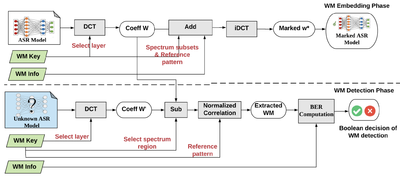

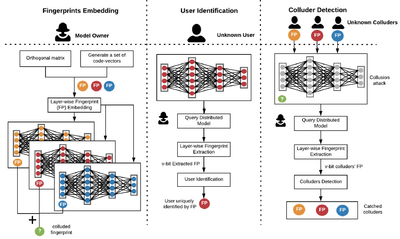

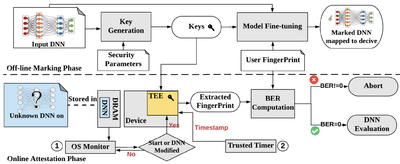

Designing and training high-performance DNNs is both time- and resource-consuming. As such, pre-trained DNNs should be considered the intellectual property (IP) of the model developer and need to be protected against copyright infringement. Several projects in this category are listed below:[3]

Emerging Technologies

WIP

Zero knowledge proofs (Nojan)

WIP

Machine Learning for media (Shehzeen)

WIP